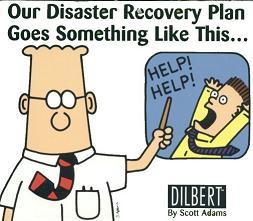

(DR/BC)*MBO=Formula for failure

This post on disaster recovery data center considerations was written by our EVP of Sales and Business Development, Jeff Barber, on LinkedIn and republished here on our blog since it’s still relevant to our customers.

The photo above is by Kelly Sikkema on Unsplash

The purpose of this article is to highlight just some of the critical questions and potential pitfalls that should be understood prior to designing, paying for and implementing a DR/BC strategy. I am of course oversimplifying in the interest of creating a post of reasonable length, but those of you who have been involved in these projects can easily fill in the blanks and add comments; I think that would be great. Those who have not been through this type of project will hopefully gain some new insights and be better prepared for its true scope.

During my tenure in the storage and networking world, my most enjoyable projects were DR/BC engagements. Not only were they very large, strategic projects, they were all very different and complex (and typically well funded). Significant variables such as company culture, the criticality and value of the data and, most importantly, the level of executive commitment were always different. Occasionally I still participate in consulting engagements via services companies that connect people like me with their clients (Gerson Lehrman Group, or GLG, for instance). Typically the clients are just looking for 1-3 hours of guidance, or third-party validation of in-house findings. It is a simple and good way to keep yourself up to speed and build your personal reference list. Your fees can even go to the charity of your choice (or your pocket…either way). This is the type of engagement I describe below, at a very high level.

Several weeks ago I completed a three-hour project for pre-planning a DR/BC initiative. This client is not a tech company, but they are a sophisticated engineering company; their data consists of manufacturing plans and various certifications and QA data. They had all the traditional data as well: email, HRMS, ERP, etc. The goal was to implement “DR” in order to comply with a requirement from a very large new customer. Their current plan essentially consisted of “recover from tape”. Under some definitions they already had DR, I suppose. The fact that it took a customer requirement to force the high-level discussion was somewhat concerning, but at least they were starting to look at the exposure.

The executive sponsor was on the initial call and spent ~20 minutes explaining how critical this project was and how closely the Board was watching it. “It is imperative the team complete a successful implementation of Disaster Recovery by xx date”, he said, adding that “we could all count on his full support when the team needed anything to make that happen”. He provided several well-defined goals for how this DR project would enable the company to be “back up and running within xx hours” of a disaster, and proposed the team should “search for ways to turn this into a positive ROI”. The structure he used to communicate the mission and milestones of the project was somewhat robotic…but I had the time to listen…He then promptly said something we hear often when the exec sponsor believes things are under control: “unfortunately I need to drop this call so I can make another important meeting…”

A very well scripted kickoff speech. In fact, it sounded more like the terms of an MBO acknowledgment form than a genuine statement. After all, the project had not really kicked off yet; how could he have such clean and tidy “accomplishments” and timelines documented?

I took only three notes from his opening comments, which were more than enough to fill all of the allotted time and provide weeks of internal discussions for them. That is actually a very good thing because they are early in the process; BC planning and implementations are not simple. They are complex and deserve much more than a cursory glance and some “fly-by management”. The comments we discussed:

- “Disaster Recovery by xx date”, in conjunction with “up and running within xx hours of a disaster”. The company was clearly using the terms Disaster Recovery and Business Continuance as synonyms. DR will typically just protect the data, not the ability to run the business using that data. BC can mean many things, but essentially it means a secondary environment that can be failed over to, complete with some level of networking, applications, data, user authentication, etc. Perhaps not production performance, but some critical capabilities. It is a much deeper level of commitment than just replication of the data (which is typically DR).

- “up and running”. Wow…this needs a definition. What does this comment apply to? All applications? All users? Access to all data? There were some very broad goals being set, and the timelines significantly underestimated the heavy lifting it would take to answer these questions. He was not a bad person or a bad executive. What he was doing was trying to oversimplify a very complex project with hundreds of moving parts. However, the company was enlightened enough to seek and pay for some guidance early in the process. The project mission and milestones can always be restated in the future; better to know the truth now.

- “search for ways to turn this into a positive ROI…” Although possible, I suppose, this would take a massive commitment to a completely mirrored environment inclusive of compute, network, bi-directional replication, etc. Using the failover site for backup processes and/or additional workloads is possible, but it is advanced and expensive. The team members should be prepared to set a different expectation on this one…

This company had multiple data centers, so they wanted to keep this in-house. I spoke about several cloud-based offerings from various companies, but they had no interest due to concerns about security, their IP, privacy of customer data, etc. Not all of these concerns were 100% legitimate, but my role was not to preach about the advancements of the cloud space in the last few years (although this company has been using Salesforce.com for many years, they could not get over the security concerns for other aspects of their data. I suppose they did not associate Salesforce with “cloud”…).

My deliverable was only to identify holes in their project scope and provide additional feedback on their direction and proposed timelines; essentially, to nudge them in the directions I thought correct. Some of the feedback I provided as proposed next steps:

Learn the Definitions: There was a lack of education here. They must truly understand the differences between Disaster Recovery and Business Continuance. One can be as simple as a secure copy of your data; the other can be a massive investment in time and resources to replicate your entire production environment. Determine where you fall within this spectrum of options. Define what the RPO (recovery point objective: how much data you can afford to lose) and RTO (recovery time objective: how long you can afford to be down) agreements truly mean to your company and its infrastructure. Be ready to have some tough conversations with the executives approving the budget. An RPO of “zero data loss” is possible, for instance, but it will come at a cost. An RTO of one hour is possible…you guessed it: expensive. Especially if you are referring to applications sensitive to latency.
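
To make the RPO/RTO conversation concrete, here is a minimal Python sketch of the kind of sanity check a team could run against each data set. It assumes a simple periodic-copy model (tape, snapshots or scheduled replication) in which the worst-case data loss is the interval between copies plus the time a copy takes to land safely offsite. The class, field names and numbers are hypothetical illustrations, not figures from this engagement.

```python
from dataclasses import dataclass
from datetime import timedelta


@dataclass
class ProtectionScheme:
    """Hypothetical description of how one data set is protected."""
    name: str
    copy_interval: timedelta     # time between the start of successive copies
    copy_duration: timedelta     # time for a copy to land safely offsite
    restore_duration: timedelta  # time to restore and bring the service back


def worst_case_data_loss(scheme: ProtectionScheme) -> timedelta:
    # Assumption: a disaster striking just before a copy completes loses
    # everything written since the previous completed copy began.
    return scheme.copy_interval + scheme.copy_duration


def meets_targets(scheme: ProtectionScheme, rpo: timedelta, rto: timedelta) -> dict:
    """Compare a scheme against the RPO/RTO the business has signed up for."""
    return {
        "rpo_met": worst_case_data_loss(scheme) <= rpo,
        "rto_met": scheme.restore_duration <= rto,
    }


# Illustrative numbers only: a nightly "recover from tape" plan checked
# against a 4-hour RPO and an 8-hour RTO.
nightly_tape = ProtectionScheme(
    name="nightly tape",
    copy_interval=timedelta(hours=24),
    copy_duration=timedelta(hours=6),
    restore_duration=timedelta(hours=48),
)
print(meets_targets(nightly_tape, rpo=timedelta(hours=4), rto=timedelta(hours=8)))
```

Running a check like this per application, with real numbers, tends to surface the tough budget conversations early, because tightening either target means changing the copy interval, the transport or the restore process.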

Need to Conduct Internal Investigations: In order to decide which is right for your company, you need to complete a lot of groundwork and company introspection, which will most likely require surveys and/or audits. This element is a large project unto itself:

- What type of data? It is not all created equal, so document what you have and decide what is mission critical. Is your server environment virtualized? What impact will that have on this project if so?

- What value do these data have to your business? How much can you lose? What would be the potential cost of loss? (These questions will be Board-level decisions in most companies.)

- What kind of applications do you depend on? Are they sensitive to the inherent latencies that replication can bring? How much time can you be offline? What do your current bandwidth and carrier capabilities look like? How far away is the target site you want to use? It matters…Do you have adequate bandwidth and carrier redundancy?

- What do your workloads look like? Can you “get by” with less performance at the failover site? What if disaster strikes during quarter or year-end close? Can the failover environment support those increased workloads? Must they?
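
On the question of how far away the target site is: with synchronous replication, every write waits for an acknowledgment from the remote site, so distance puts a floor under write latency. The sketch below is a rough Python estimate under stated assumptions: light travels through optical fiber at roughly 200,000 km/s, the fiber route is as short as the straight-line distance (real routes are longer), and equipment and protocol delays are ignored. The function names are hypothetical.

```python
FIBER_KM_PER_MS = 200.0  # approximate speed of light in optical fiber (~200,000 km/s)


def min_round_trip_ms(distance_km: float) -> float:
    """Best-case round-trip time to the target site, propagation delay only."""
    if distance_km <= 0:
        raise ValueError("distance_km must be positive")
    return 2 * distance_km / FIBER_KM_PER_MS


def max_serial_sync_writes_per_sec(distance_km: float) -> float:
    """Upper bound on dependent (one-after-another) synchronous writes.

    Each write must wait one full round trip for the remote acknowledgment,
    so a strictly serial writer can never exceed this rate.
    """
    return 1000.0 / min_round_trip_ms(distance_km)


for km in (50, 100, 1000):
    print(km, "km:", min_round_trip_ms(km), "ms round trip,",
          max_serial_sync_writes_per_sec(km), "serial writes/sec at best")
```

Real-world numbers will be worse than these floors, which is why latency-sensitive applications often push the design toward a nearby target site or asynchronous replication, with the RPO trade-off that implies.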

Properly Set Internal Expectations: Once the process of understanding these questions begins, and the implications of the RPO/RTO agreements are digested, panic will set in…You will realize that all of this work and money will be wasted without a comprehensive plan of HOW AND WHEN TO USE THE ENVIRONMENT. Many more questions will need to be addressed, outside the technical realm. Replicating the data will look easy compared to the operational decisions.

For instance, something as “simple” as: what is the definition of a disaster? Who decides when to fail over to another site? What if a critical application such as SAP goes down, but the others are still up and running? Do you fail over everything to get SAP back up? Who is the backup executive if the primary cannot be reached or, worst case, is no longer alive? Do all employees understand the protocol for potentially different sign-on procedures (all the data being safe is great, but can everyone easily authenticate to access that data)? There are many, many more…but you get the gist of what I’m saying here: You can have this GOOD, FAST or CHEAP: Pick any two…

Create the Plan, Learn the Plan, Test the Plan, Evaluate the Plan, Forever: The best and most critical environments never allow this project to end. They produce a living document, and they exercise the machine often through consistent drilling and evaluation of the drill performance. Yes, expensive and disruptive…plan for it. The maintenance requirements for production and the failover site are identical in true Business Continuance plans. This fact must be documented and agreed to.

In summary, I told them: this takes dedication and resources…Get some outside help and set realistic expectations. Beware of the temptation to “check the box…” of having a plan and infrastructure when you do not (i.e. the head-in-sand / fingers-crossed strategy).

The argument will be made that disasters are very rare, and this may not be worth all the expense and impact. This is a valid point, in that it argues for understanding and documenting which applications and employees require true Business Continuance. The reality is that not all data or applications are truly mission critical…If you wish to exclude certain environments from BC, you first need to understand the criticality of each of those environments. During the interview process, every application owner will claim “mission critical” status.

Beware of application inter-dependency too. Many apps you declare as Tier-2 will be “feeders” to Tier-1. ETL processes feeding a data warehouse are a good example. The reports produced may be Tier-1, but many of the data pools or templates they extract from may not be…Tier-2 apps can be graduated to Tier-1 by association.
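
The “Tier-1 by association” effect can be worked out mechanically once the dependencies are documented. The Python sketch below is one way to do it, assuming you have a declared tier for every application and a map of which applications each one depends on; the application names and tiers are hypothetical. Each app is promoted to the most critical tier (lowest number) of anything that consumes it, directly or indirectly.

```python
from collections import deque


def effective_tiers(declared: dict[str, int],
                    depends_on: dict[str, list[str]]) -> dict[str, int]:
    """Promote feeder apps to the tier of their most critical consumer.

    Assumes every app mentioned in depends_on also appears in declared.
    Tier 1 is the most critical; a lower number means more critical.
    """
    effective = dict(declared)
    queue = deque(declared)
    while queue:
        app = queue.popleft()
        for upstream in depends_on.get(app, ()):
            # A feeder must be at least as critical as the app it feeds.
            if effective[upstream] > effective[app]:
                effective[upstream] = effective[app]
                queue.append(upstream)  # re-check the feeder's own feeders
    return effective


# Hypothetical inventory: executive reports are Tier-1, and they extract
# from a data warehouse that is in turn loaded by ETL jobs.
declared = {"exec_reports": 1, "data_warehouse": 2, "etl_jobs": 2, "intranet_wiki": 3}
depends_on = {"exec_reports": ["data_warehouse"], "data_warehouse": ["etl_jobs"]}
print(effective_tiers(declared, depends_on))
```

In this illustration the warehouse and the ETL jobs both end up as Tier-1, while the wiki stays at Tier-3, which is the sort of result that changes the scope and budget conversation.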

In the end I recommended they revisit the cloud-based option and focus on the SLAs with that supplier to meet customer and Board requirements. Ensure the potential providers address the concerns directly in their proposal. This company’s particular DR/BC mountain was just too difficult to climb in the allotted timelines, which typically dictates outsourcing the function or resetting the goals. Please add your comments and questions!

If you’d like to continue the conversation with Jeff, feel free to comment below or on his original LinkedIn post. If you’d like to schedule a meeting directly with him about how Prime Data Centers can help your company, give us a call or email.